1. Spectral types collected from literature:

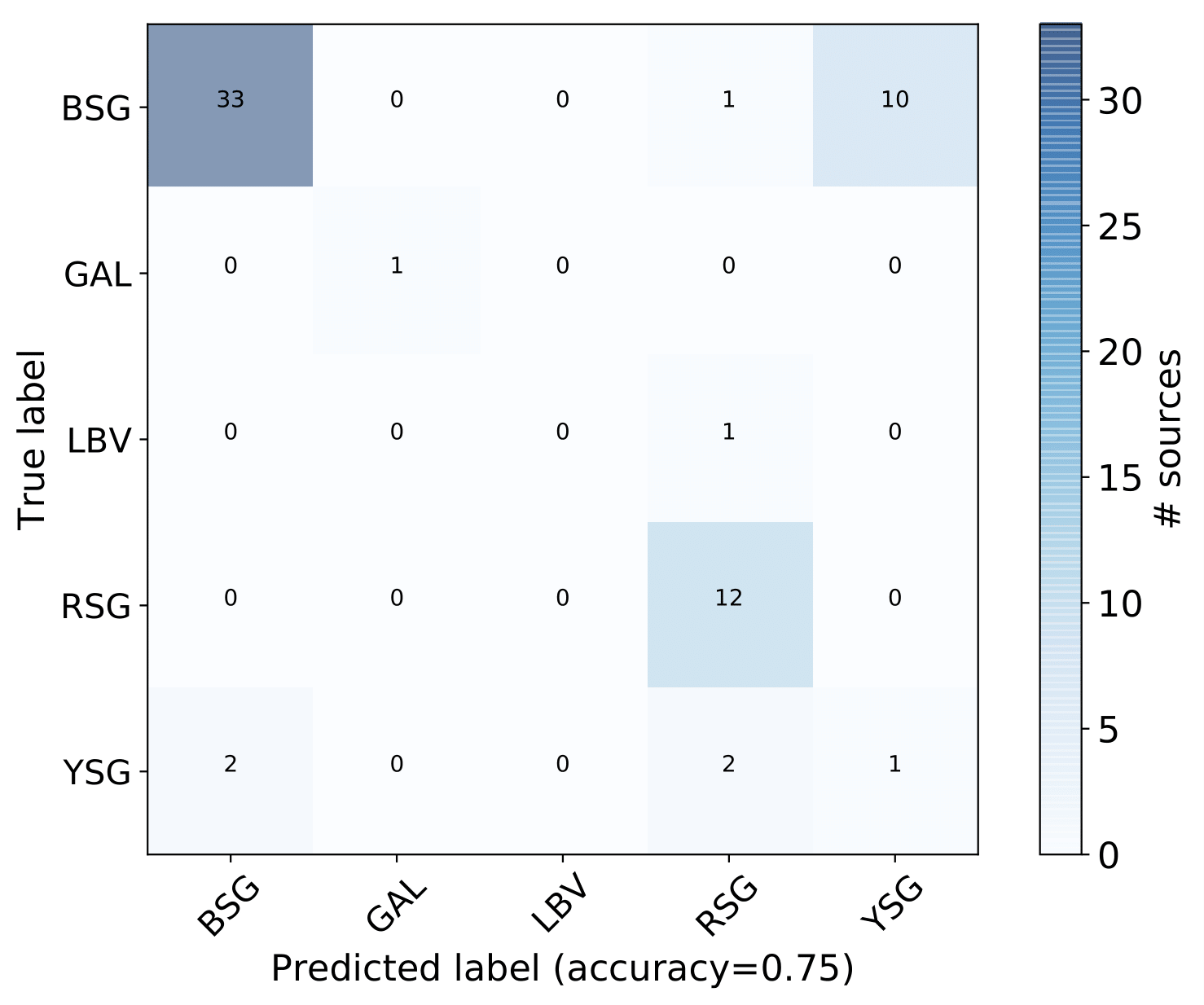

training sample: M31 (1142 sources) and M33 (1388 sources)

test sample: WLM (36 sources), IC 1613 (20 sources), Sextans A (16 sources)

2. Identifying foreground sources using Gaia DR2 astrometry (these correspond to ~8% of the original sample).
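The foreground screening can be illustrated with a minimal sketch. The source does not state its exact criteria, so the function name `flag_foreground` and the 5σ thresholds below are hypothetical assumptions. The idea is that stars in M31 or M33 are too distant for Gaia DR2 to measure a significant parallax or proper motion, so a significant detection of either suggests a Milky Way foreground object. The argument names follow the Gaia DR2 column names (`parallax`, `pmra`, `pmdec` and their `_error` counterparts).

```python
import numpy as np


def flag_foreground(parallax, parallax_error, pmra, pmra_error, pmdec, pmdec_error,
                    plx_snr_min=5.0, pm_snr_min=5.0):
    """Return True for sources whose Gaia DR2 astrometry suggests a foreground star.

    Hypothetical criterion: parallax or total proper motion detected above the
    given signal-to-noise thresholds. Missing astrometry (NaN) is never flagged.
    """
    plx, plx_err, pmra, pmra_err, pmdec, pmdec_err = (
        np.asarray(a, dtype=float)
        for a in (parallax, parallax_error, pmra, pmra_error, pmdec, pmdec_error)
    )
    # NaN comparisons evaluate to False, so sources without astrometry are kept
    with np.errstate(invalid="ignore", divide="ignore"):
        plx_snr = plx / plx_err
        # proper-motion significance, ignoring the pmra/pmdec correlation
        pm_snr = np.hypot(pmra / pmra_err, pmdec / pmdec_err)
        return (plx_snr > plx_snr_min) | (pm_snr > pm_snr_min)


# Toy values: a distant star, a nearby high-parallax star, a star without astrometry
mask = flag_foreground(
    parallax=[0.02, 2.5, np.nan], parallax_error=[0.10, 0.10, np.nan],
    pmra=[0.1, 5.0, np.nan], pmra_error=[0.2, 0.2, np.nan],
    pmdec=[0.0, -3.0, np.nan], pmdec_error=[0.2, 0.2, np.nan],
)
print(mask)  # -> [False  True False]
```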

3. Collecting photometric data:

- Spitzer mid-IR photometry, from recently released public catalogs. Because their positions all derive from a single instrument, they provide a consistent reference for crossmatching with the various other works.

- Pan-STARRS optical photometry.
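A positional crossmatch of this kind can be sketched as a nearest-neighbour search on the sky. This is a brute-force illustration only; the function names and the 1 arcsec matching radius are hypothetical assumptions, not the values used in this work.

```python
import numpy as np


def angular_separation_arcsec(ra1, dec1, ra2, dec2):
    """Great-circle separation (haversine formula); inputs in degrees, output in arcsec."""
    ra1, dec1, ra2, dec2 = map(np.radians, (ra1, dec1, ra2, dec2))
    a = (np.sin((dec2 - dec1) / 2) ** 2
         + np.cos(dec1) * np.cos(dec2) * np.sin((ra2 - ra1) / 2) ** 2)
    return np.degrees(2 * np.arcsin(np.sqrt(np.clip(a, 0.0, 1.0)))) * 3600.0


def crossmatch(ra_ref, dec_ref, ra_cat, dec_cat, radius_arcsec=1.0):
    """Nearest catalog match for each reference source (brute force, O(N*M)).

    Returns (index, separation); index is -1 when the nearest catalog
    source lies farther than radius_arcsec.
    """
    ra_ref, dec_ref, ra_cat, dec_cat = (
        np.asarray(x, dtype=float) for x in (ra_ref, dec_ref, ra_cat, dec_cat)
    )
    # (N, M) matrix of separations via broadcasting
    sep = angular_separation_arcsec(ra_ref[:, None], dec_ref[:, None],
                                    ra_cat[None, :], dec_cat[None, :])
    nearest = np.argmin(sep, axis=1)
    nearest_sep = sep[np.arange(len(ra_ref)), nearest]
    return np.where(nearest_sep <= radius_arcsec, nearest, -1), nearest_sep


# Toy positions near the centre of M31; catalog entry 0 is offset by 0.5" in Dec
idx, sep = crossmatch([10.6847, 10.70], [41.2690, 41.30],
                      [10.6847, 11.0], [41.2690 + 0.5 / 3600, 41.0])
print(idx)  # -> [ 0 -1]
```

For catalogs with thousands of sources a tree-based search is preferable; astropy's `SkyCoord.match_to_catalog_sky` provides one.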

After the above screening our training sample consists of 527 M31 and 563 M33 sources.

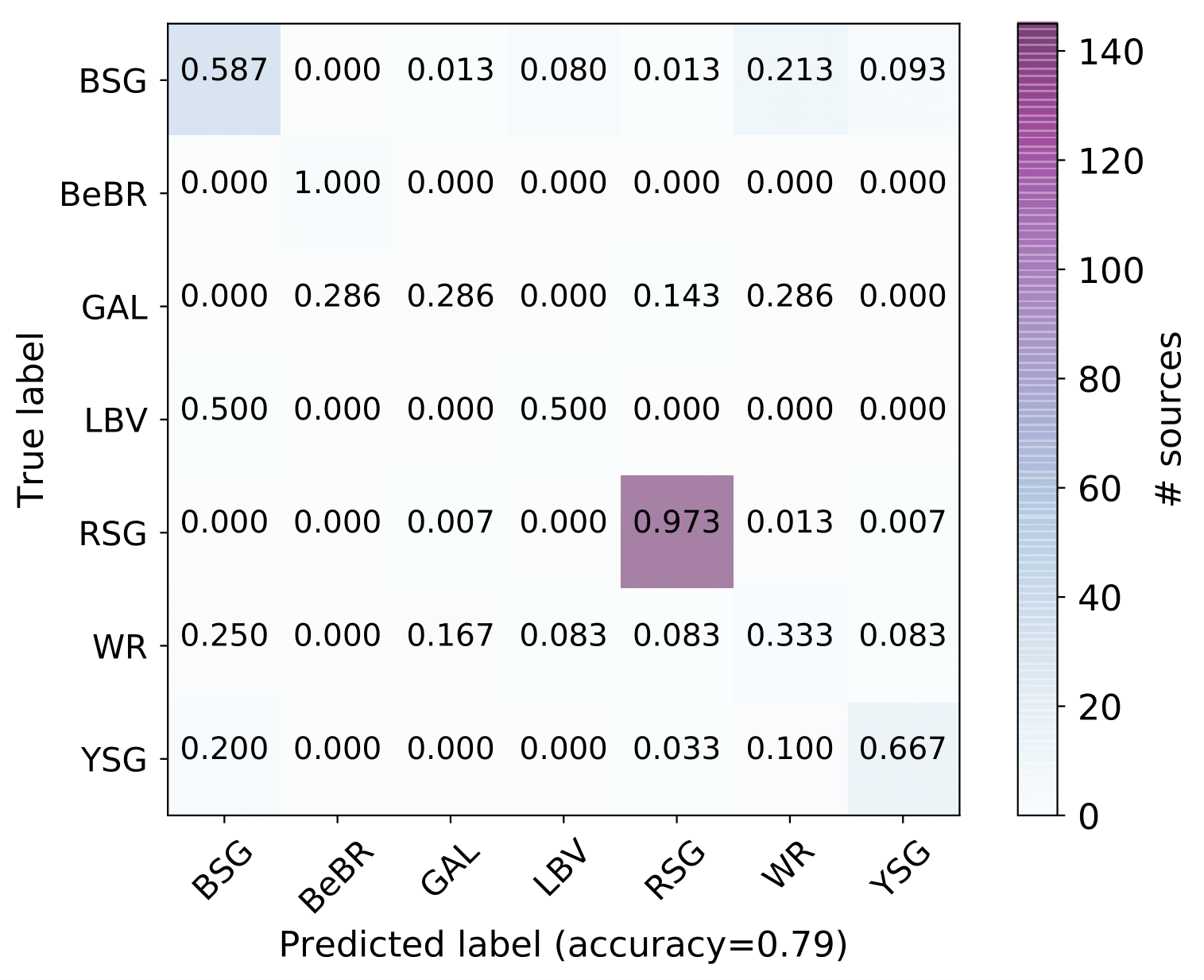

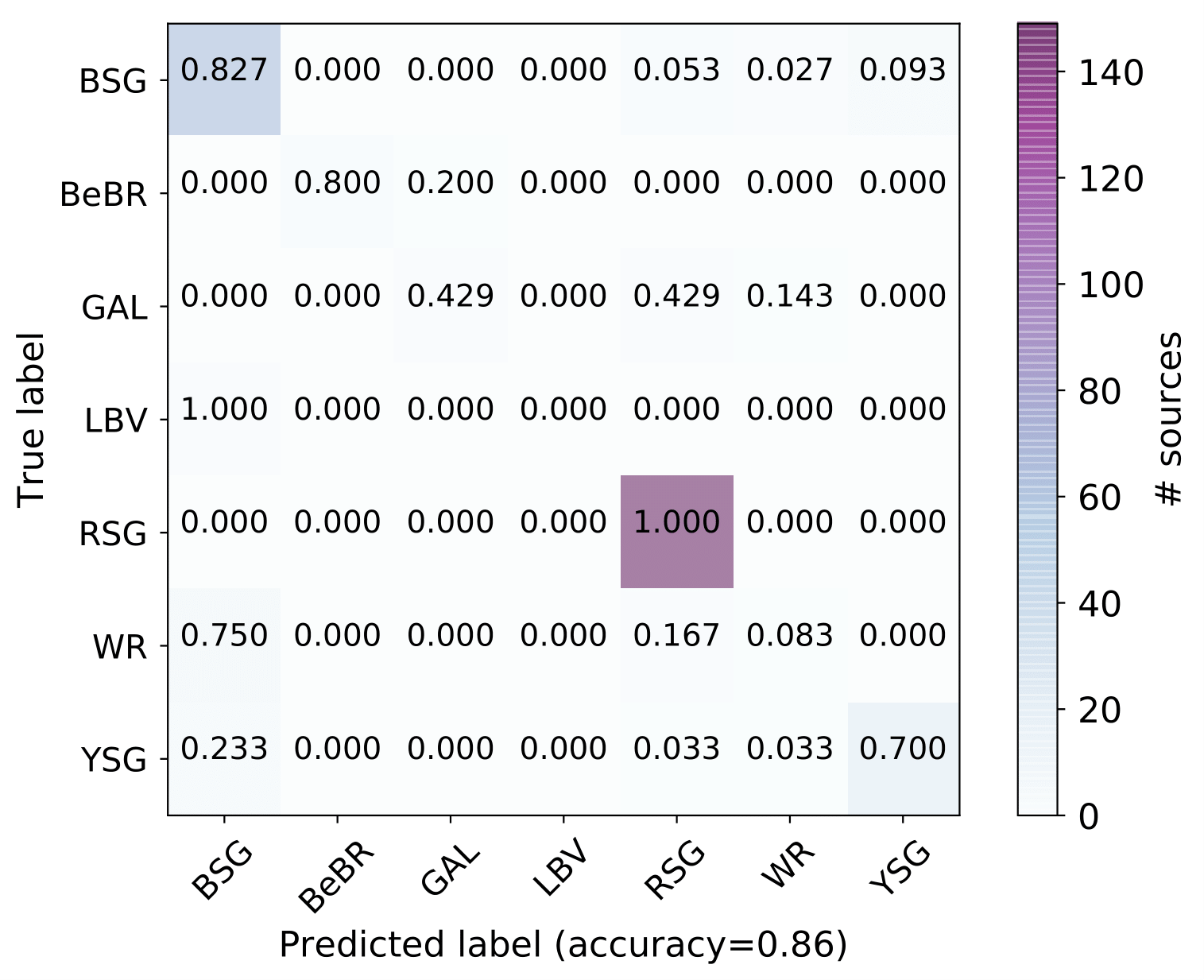

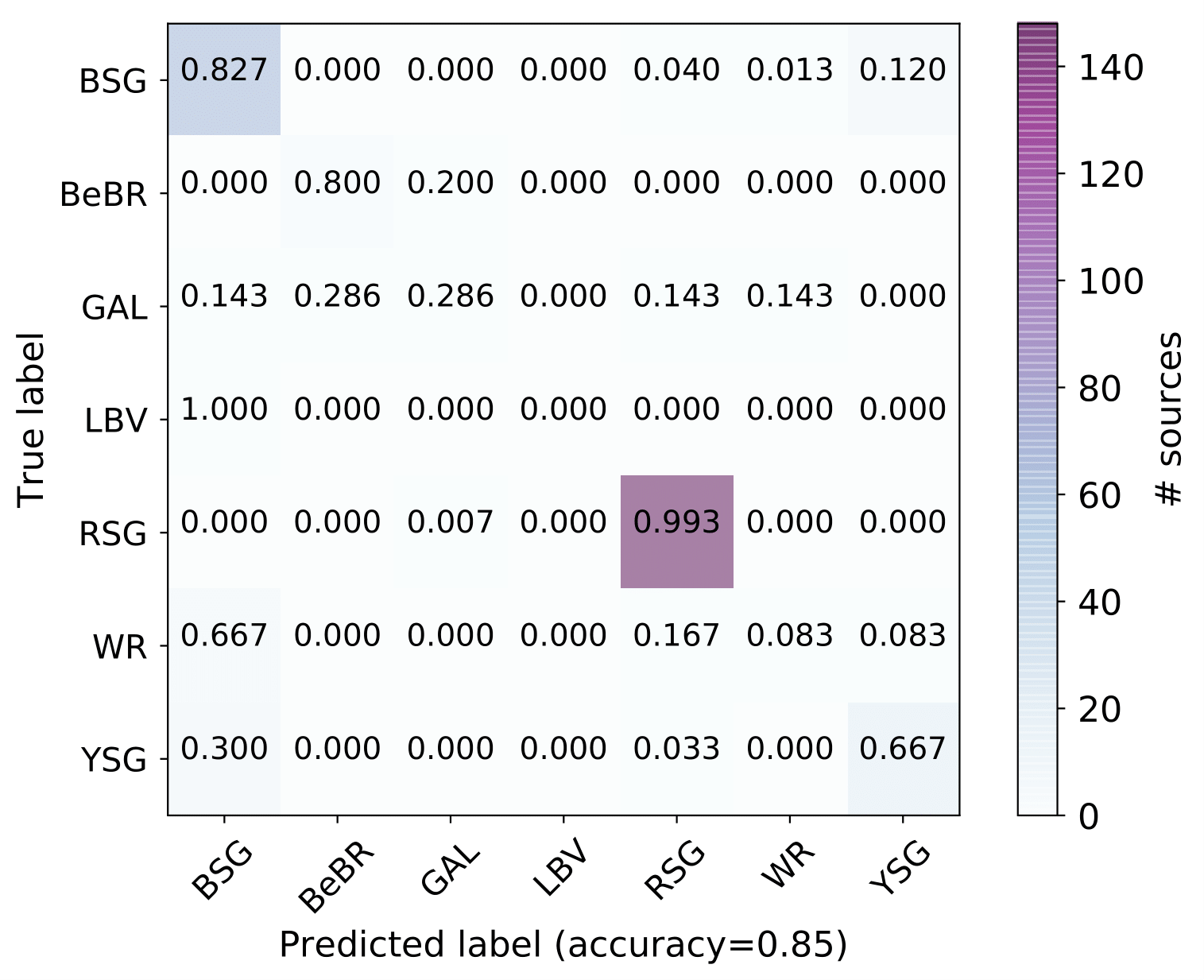

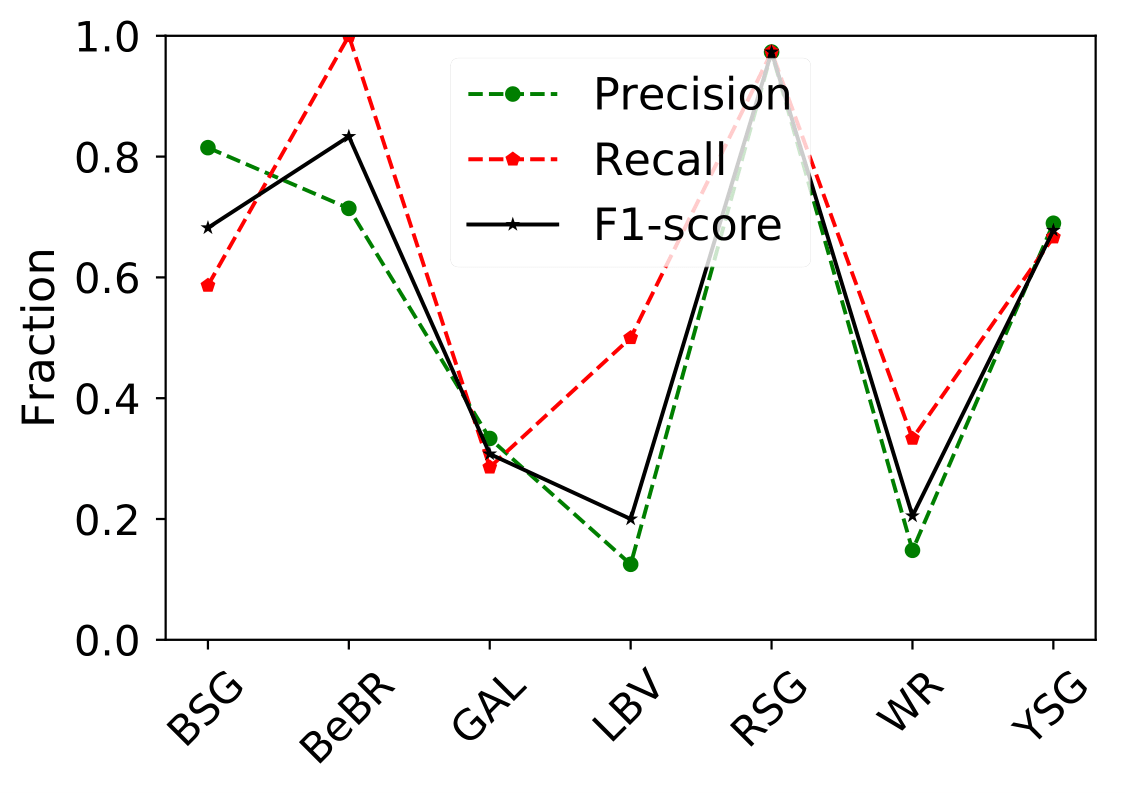

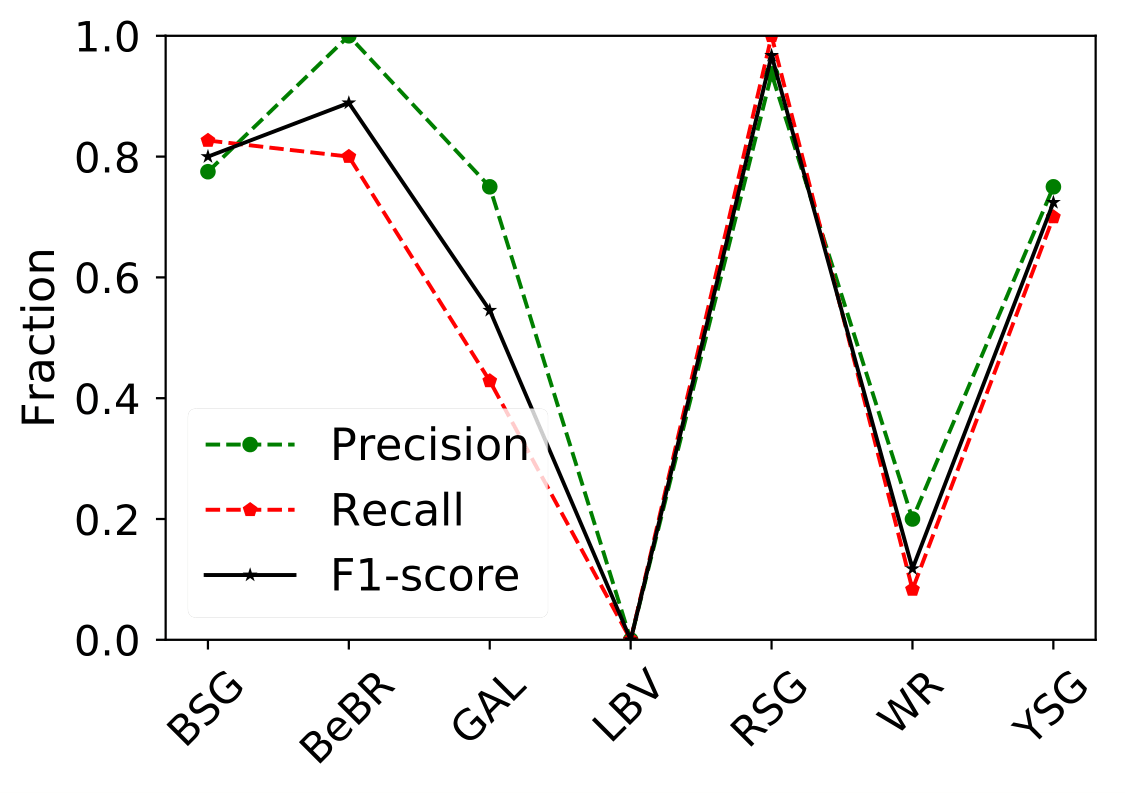

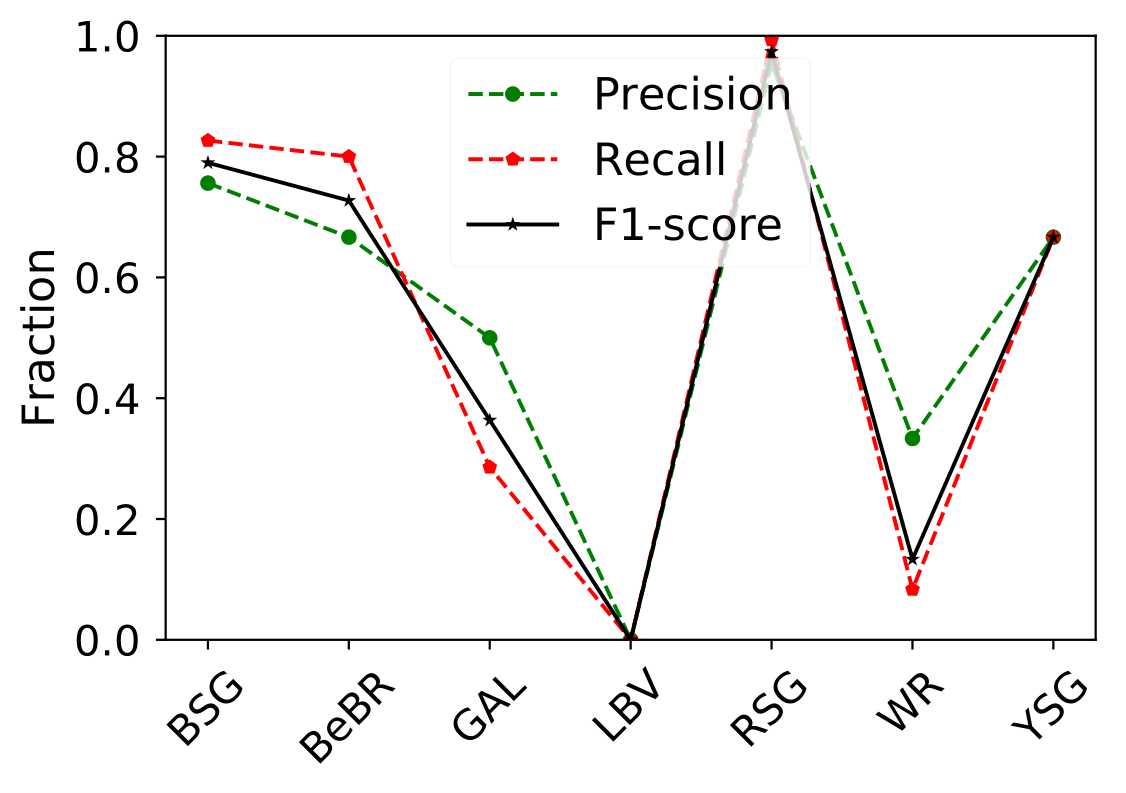

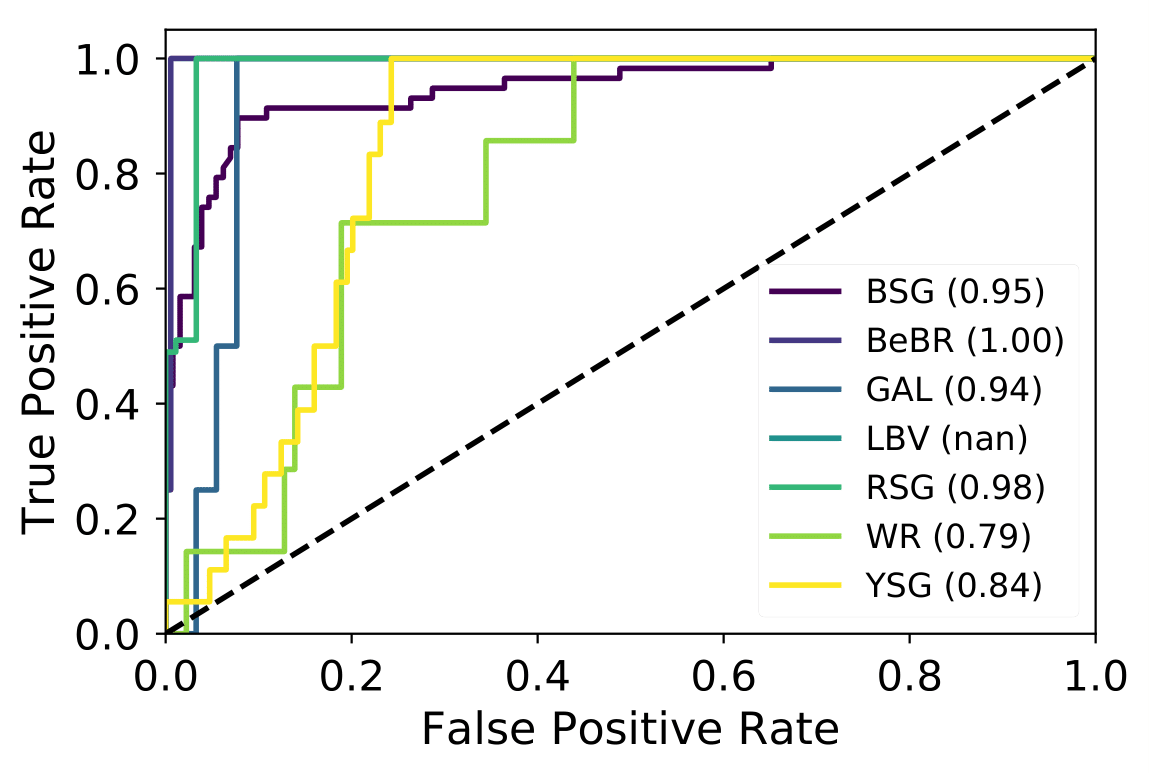

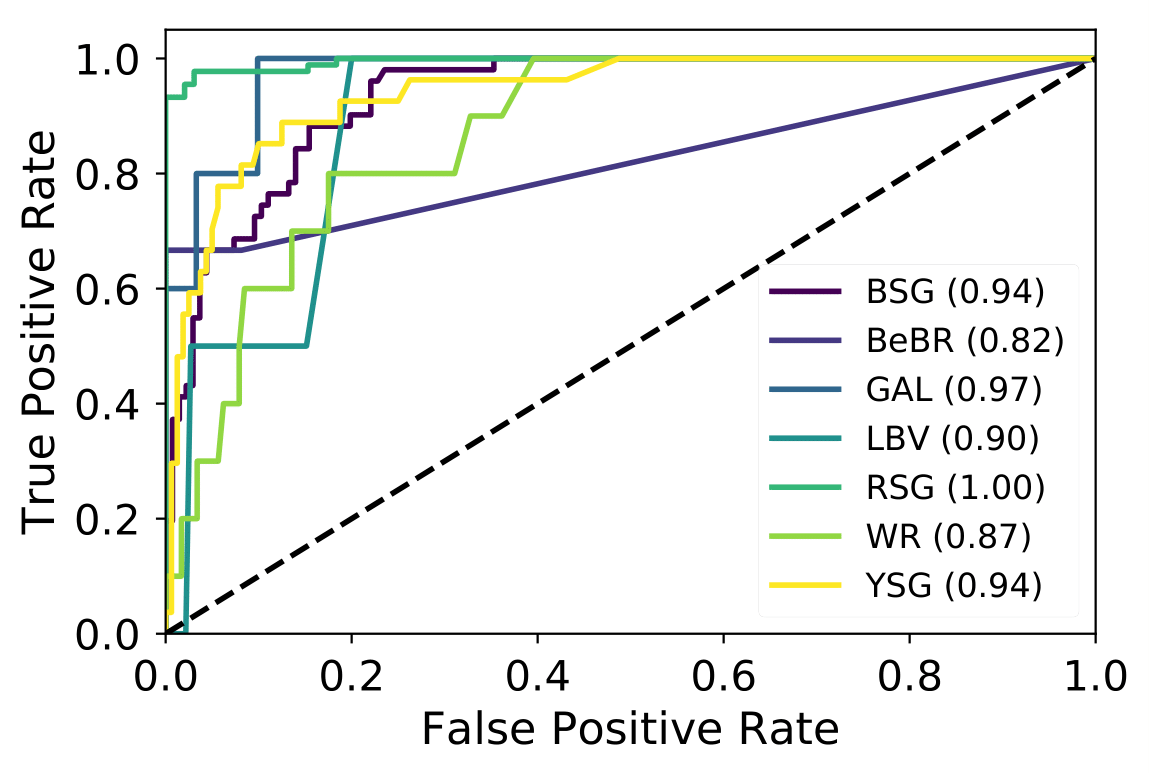

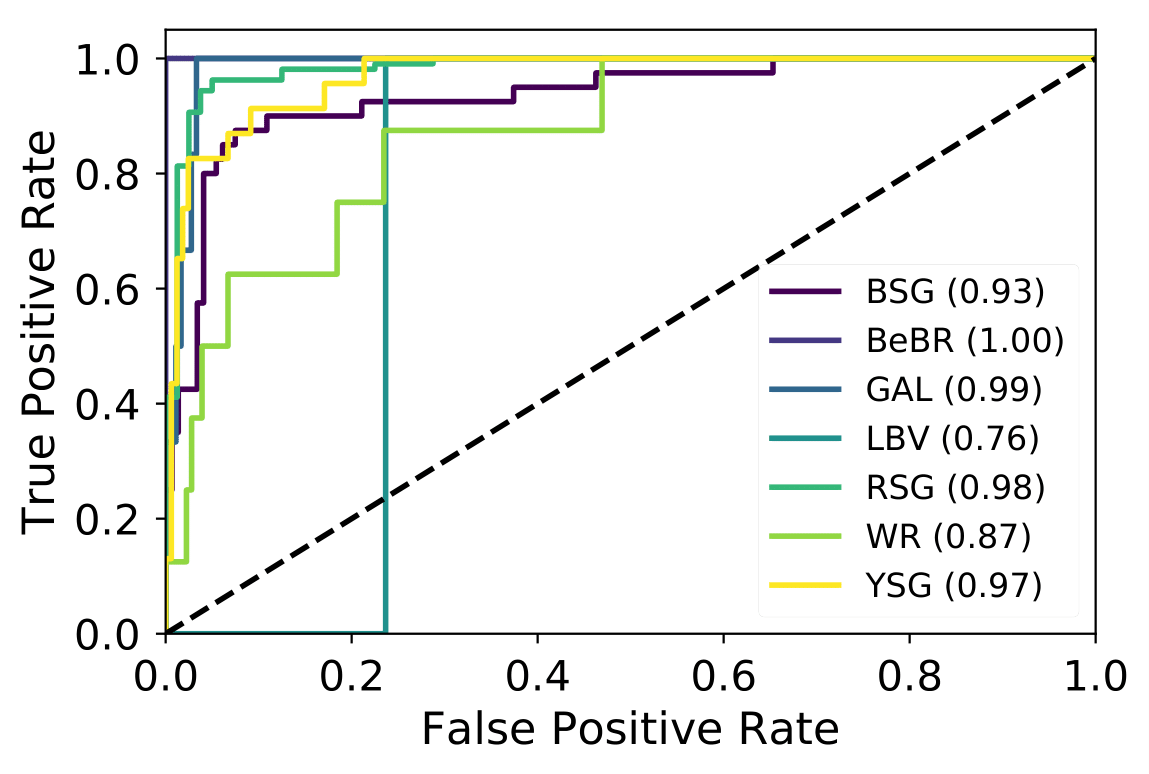

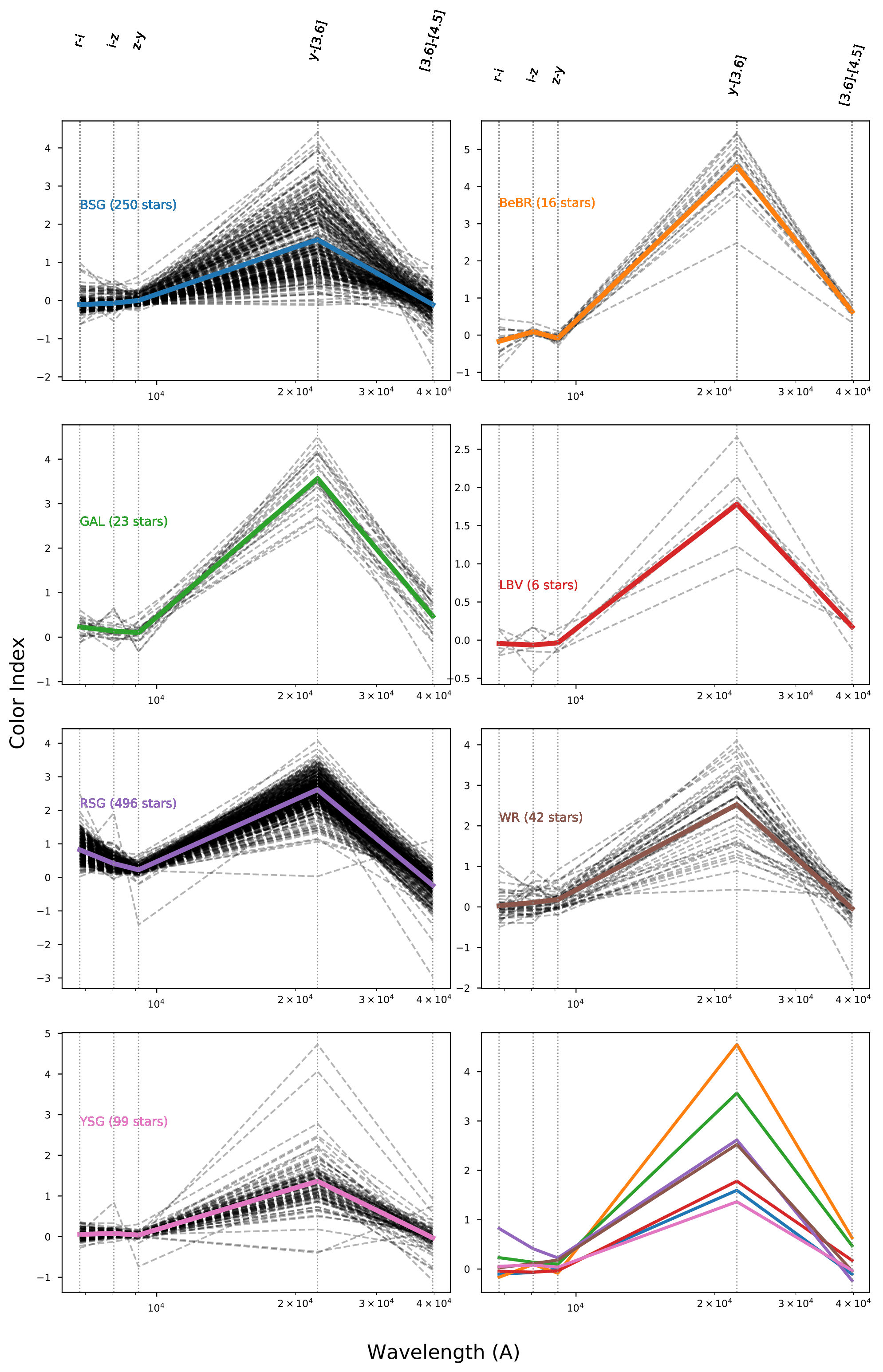

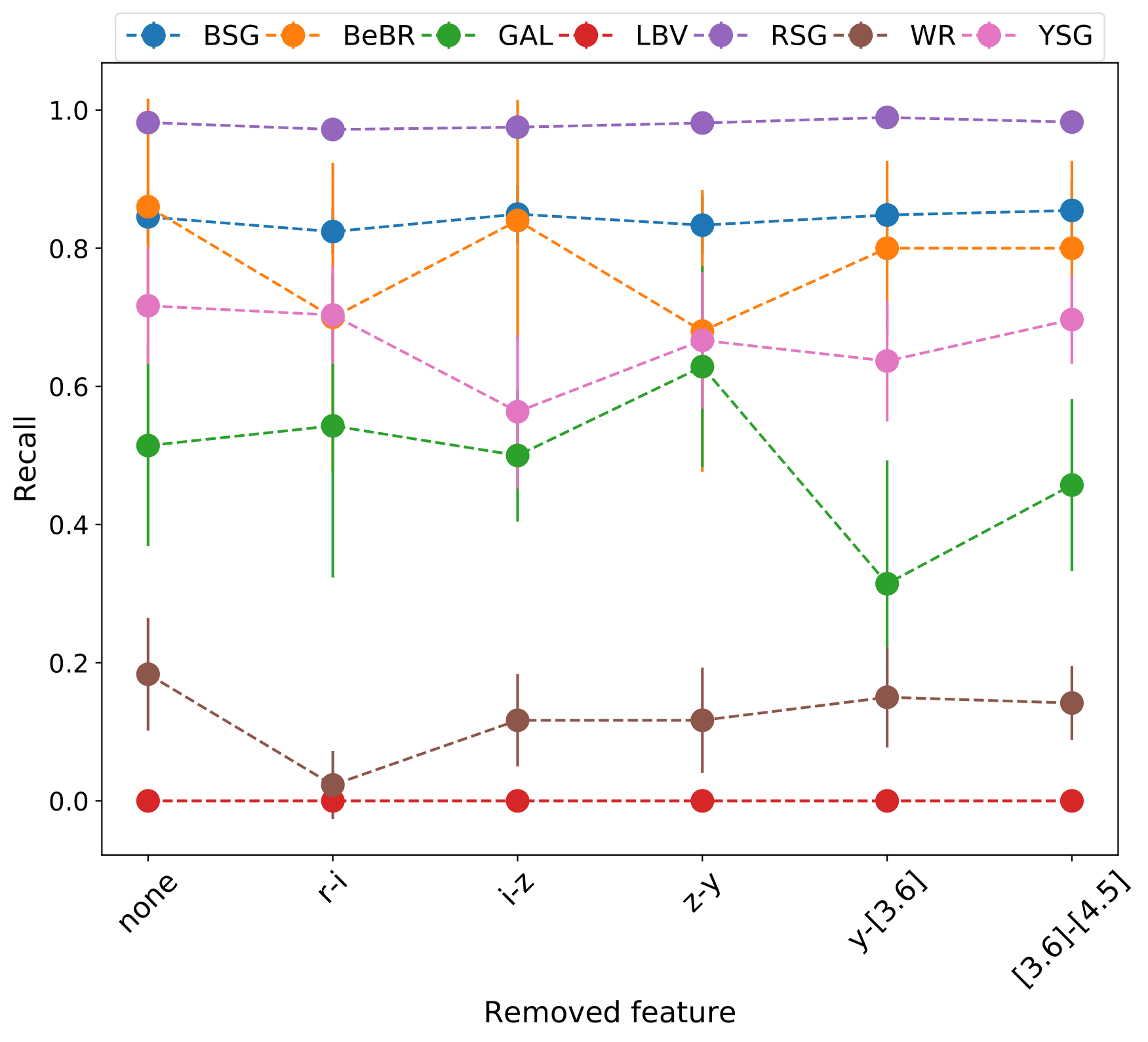

From the 1089 sources we removed 12 with ambiguous classifications. These sources span 35 distinct spectral classifications, some of which are represented by only a handful of objects. To improve the efficiency of the classifier we grouped the sources into broader classes, excluding mainly sources with uncertain spectral types. Our classification scheme consists of 7 classes:

- BSG : main-sequence and evolved OBA stars, including those with emission (e.g., Be stars)

- WR : all types of (classical) Wolf-Rayet stars

- LBV : only secure Luminous Blue Variables

- BeBR : secure and candidate B[e] supergiants

- YSG : F and G stars, including those identified as Yellow Supergiants

- RSG : K and M stars, including Red Supergiants and those with a B companion (Neugent et al. 2019)

- GAL : AGN, QSO, and background galaxies; this class collects outliers that would otherwise be assigned to the other classes (these are the expected contaminants in our target galaxies)
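The grouping into these 7 classes can be sketched as a mapping from spectral-type strings to class labels. The rules below are a hypothetical simplification: the source does not list its exact string conventions, and real literature types (ranges, composite types, "cand." tags) need more care. Order matters here, since a B[e] supergiant must be caught before the generic "B" rule.

```python
from typing import Optional

# Hypothetical, simplified rules; not the exact scheme used in this work
_FIRST_LETTER = {"O": "BSG", "B": "BSG", "A": "BSG",
                 "F": "YSG", "G": "YSG",
                 "K": "RSG", "M": "RSG"}
_GALAXY_LABELS = {"AGN", "QSO", "GAL", "GALAXY"}


def broad_class(sptype: str) -> Optional[str]:
    """Map a spectral-type string to one of the 7 broad classes, or None to exclude it."""
    s = sptype.strip()
    if not s:
        return None
    if "B[e]" in s:                  # secure and candidate B[e] supergiants
        return "BeBR"
    if "LBV" in s:                   # only secure LBVs; candidates ("cLBV", "LBV?") dropped
        return "LBV" if s.startswith("LBV") and "?" not in s else None
    if "?" in s:                     # other uncertain types are excluded
        return None
    if s.startswith(("WN", "WC", "WO")):
        return "WR"
    if s.upper() in _GALAXY_LABELS:
        return "GAL"
    # O/B/A -> BSG, F/G -> YSG, K/M -> RSG (e.g. "K5 I + B" -> RSG); else None
    return _FIRST_LETTER.get(s[0].upper())


for t in ["B1.5 Ia", "sgB[e]", "WN6", "cLBV", "F5 Ia", "M2 I", "QSO", "B2 I?"]:
    print(t, "->", broad_class(t))
```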

Supervised approaches require training on a well-characterized sample, which in turn requires a complete set of photometry for every source. The available bands are g, r, i, z, y in the optical and [3.6], [4.5], [5.8], [8.0], [24] in the mid-IR.

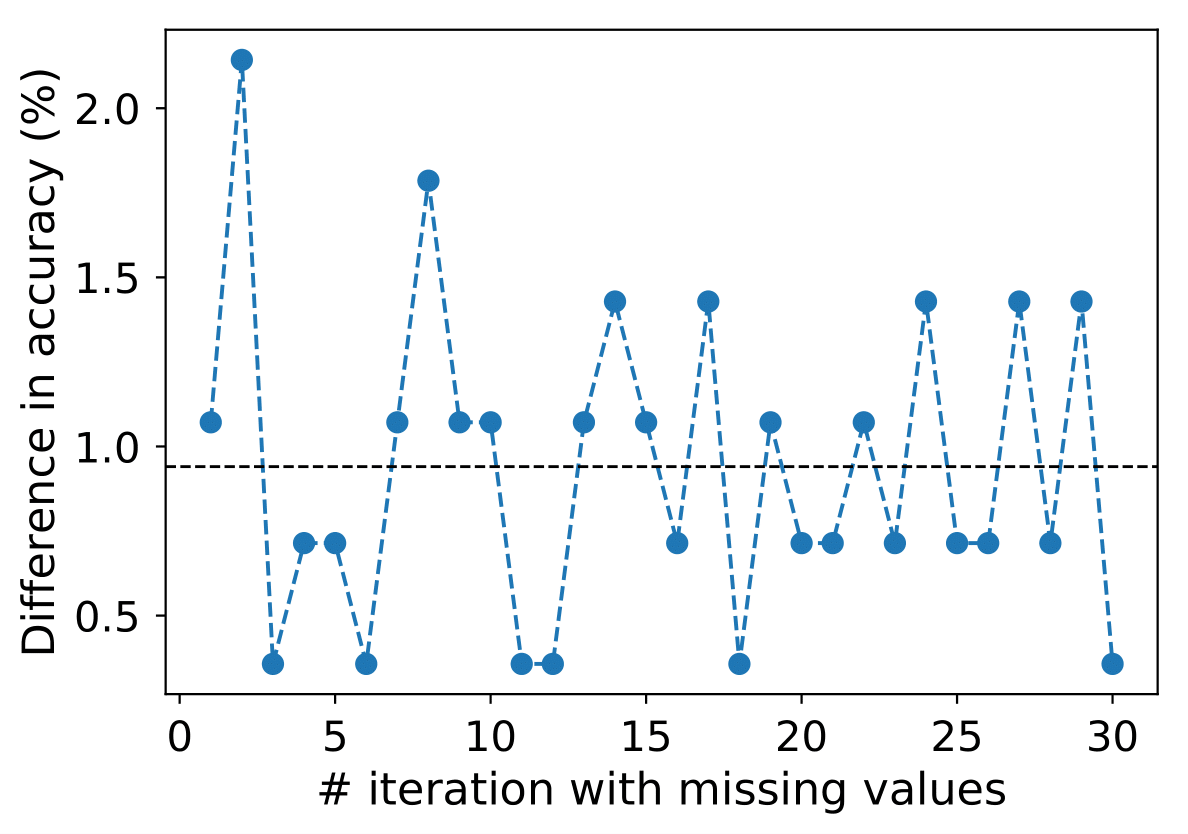

However, most sources have only upper limits in [5.8], [8.0], and [24], so their values in these bands are not reliable. Using those bands and rejecting sources with upper limits would halve our training sample, so we opted to disregard them. The g band shows issues for fainter objects and is the band most affected by extinction. Since we opted not to correct for extinction (the extinction laws of most of our galaxies are unknown), we disregard the g band as well.

Gaia photometry is also excluded because too few sources in the more distant galaxies have Gaia measurements.
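The cost of requiring every band can be illustrated with a small completeness check. This sketch assumes, hypothetically, that upper limits and non-detections are stored as NaN; the magnitudes are purely illustrative toy values, not data from this work.

```python
import numpy as np

# Hypothetical convention: upper limits / non-detections are stored as NaN
BANDS = ["g", "r", "i", "z", "y", "3.6", "4.5", "5.8", "8.0", "24"]
USED = ["r", "i", "z", "y", "3.6", "4.5"]   # bands retained in the text above


def has_all_bands(mags, bands, columns=BANDS):
    """Boolean mask: True where a source has a finite magnitude in every requested band."""
    cols = [columns.index(b) for b in bands]
    return np.all(np.isfinite(mags[:, cols]), axis=1)


nan = np.nan
# Purely illustrative magnitudes for four sources (not real data)
mags = np.array([
    [20.1, 19.8, 19.6, 19.5, 19.4, 17.0, 16.9, 16.8, 16.7, 15.0],
    [21.0, 20.5, 20.3, 20.2, 20.1, 18.0, 17.9, nan,  nan,  nan],
    [nan,  21.5, 21.2, 21.0, 20.9, 19.0, 18.8, nan,  nan,  nan],
    [19.0, 18.7, 18.5, 18.4, 18.3, 15.5, 15.4, 15.3, nan,  nan],
])
print(has_all_bands(mags, BANDS).sum(), has_all_bands(mags, USED).sum())  # -> 1 4
```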

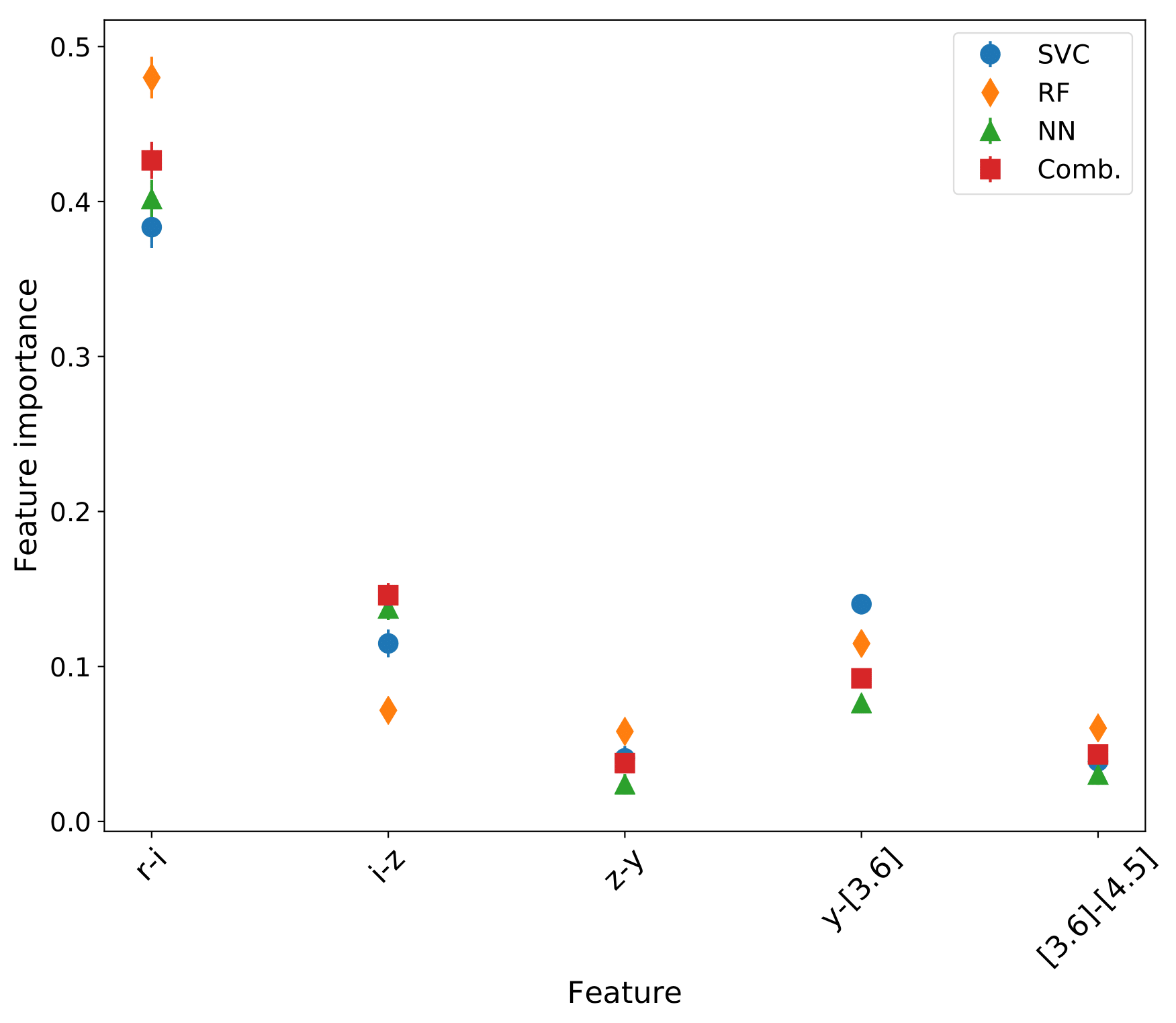

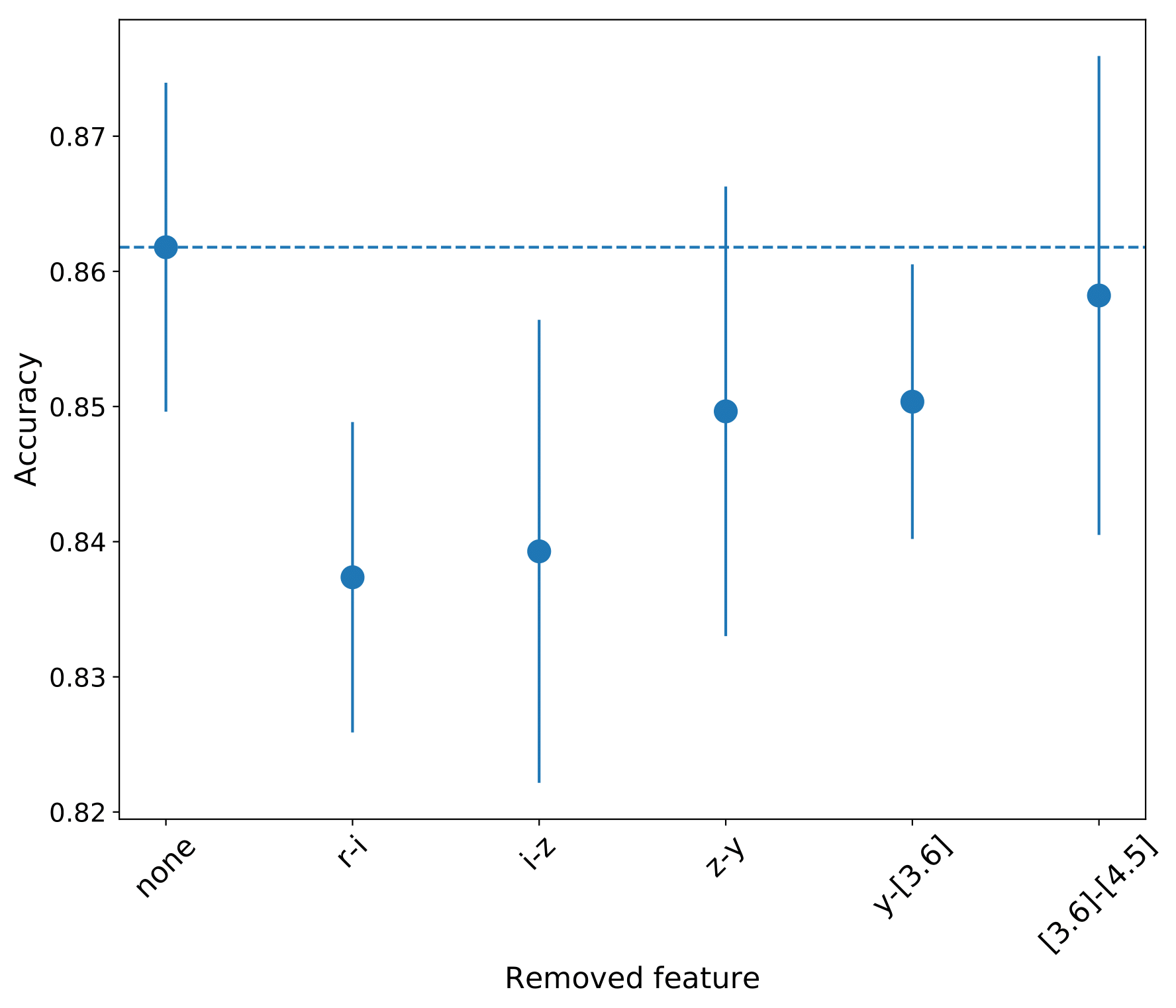

To remove any distance dependence we used colors built from consecutive bands, i.e., r-i, i-z, z-y, y-[3.6], and [3.6]-[4.5], which is the simplest and most intuitive representation of the data¹,².

¹ These consecutive color indices are less affected by extinction, less correlated with each other, and better at distinguishing some of the classes.

² Experiments with other transformations of the data (e.g., normalized fluxes, standardized features) did not yield any significant improvement.
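Why colors remove the distance dependence can be shown directly: with $m_\lambda = M_\lambda + \mu$, every band of a given source shares the same distance modulus $\mu$, so $m_k - m_{k+1} = M_k - M_{k+1}$. A minimal sketch, assuming magnitudes are stored in the band order below (the array layout and function name are illustrative assumptions):

```python
import numpy as np

# Bands in wavelength order; consecutive differences give r-i, i-z, z-y, y-[3.6], [3.6]-[4.5]
BANDS = ["r", "i", "z", "y", "3.6", "4.5"]
COLOR_NAMES = [f"{a}-{b}" for a, b in zip(BANDS[:-1], BANDS[1:])]


def consecutive_colors(mags):
    """(N, 6) apparent magnitudes -> (N, 5) colors of adjacent bands.

    The distance modulus mu is the same in every band, so it cancels in each
    difference. Extinction is NOT removed, since A_lambda differs between bands.
    """
    mags = np.asarray(mags, dtype=float)
    return mags[:, :-1] - mags[:, 1:]


m = np.array([[20.0, 19.7, 19.5, 19.4, 18.0, 17.9]])
print(COLOR_NAMES)
# The same star placed 5 mag farther away has identical colors
print(np.allclose(consecutive_colors(m), consecutive_colors(m + 5.0)))  # -> True
```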

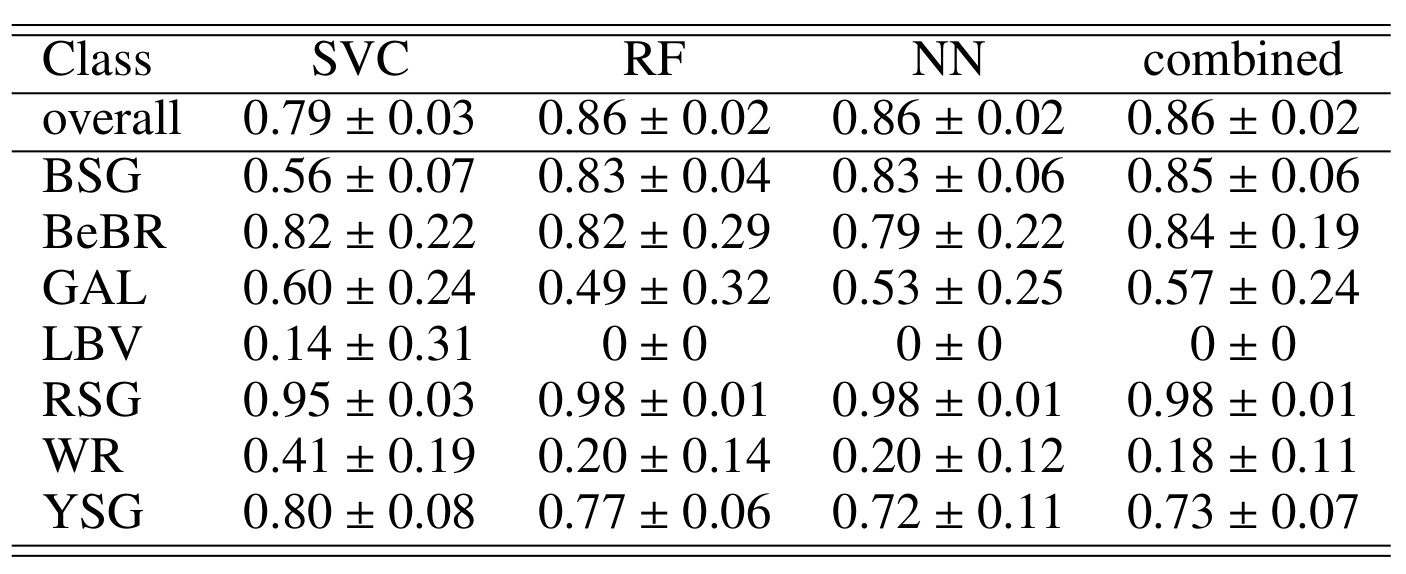

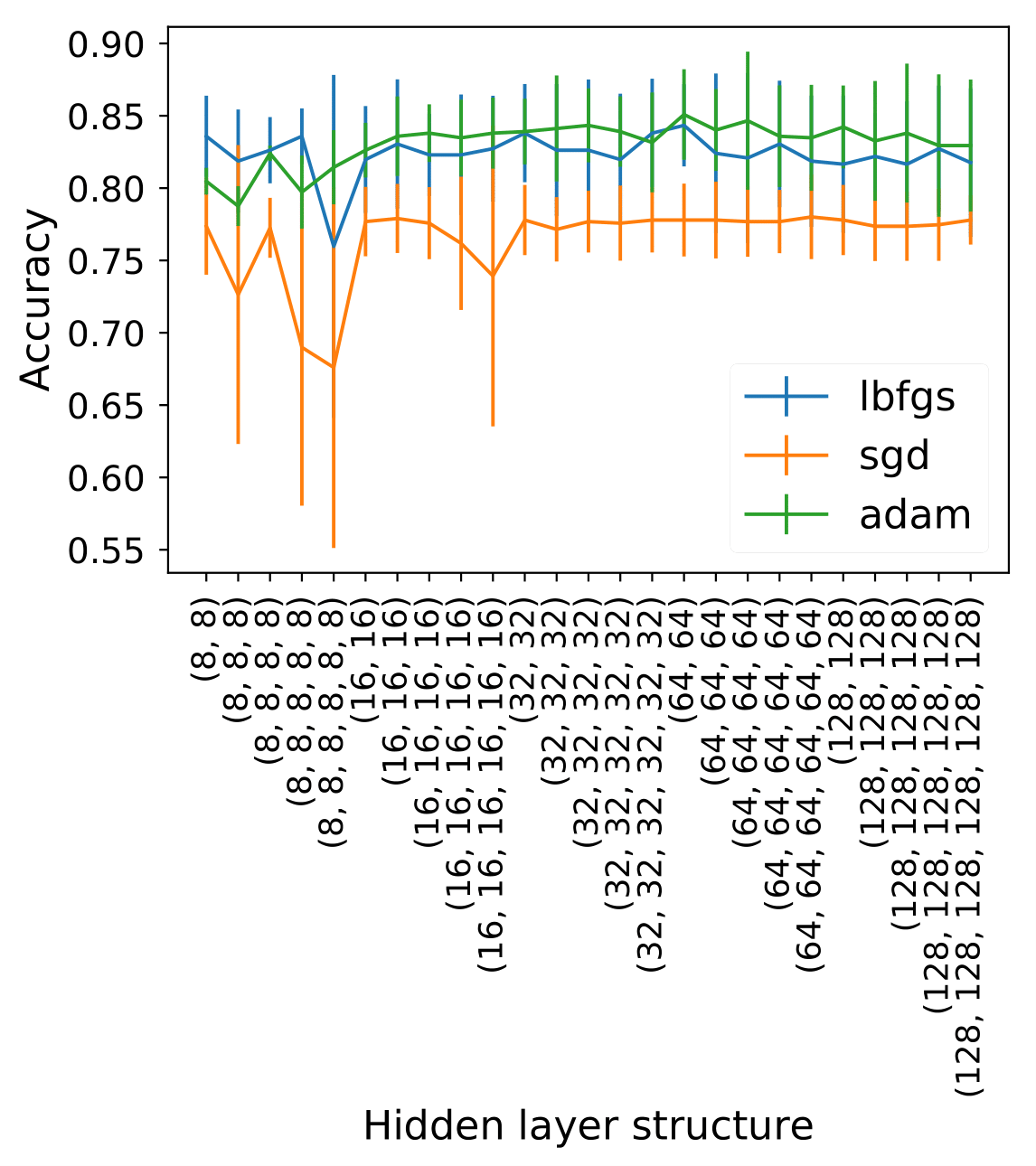

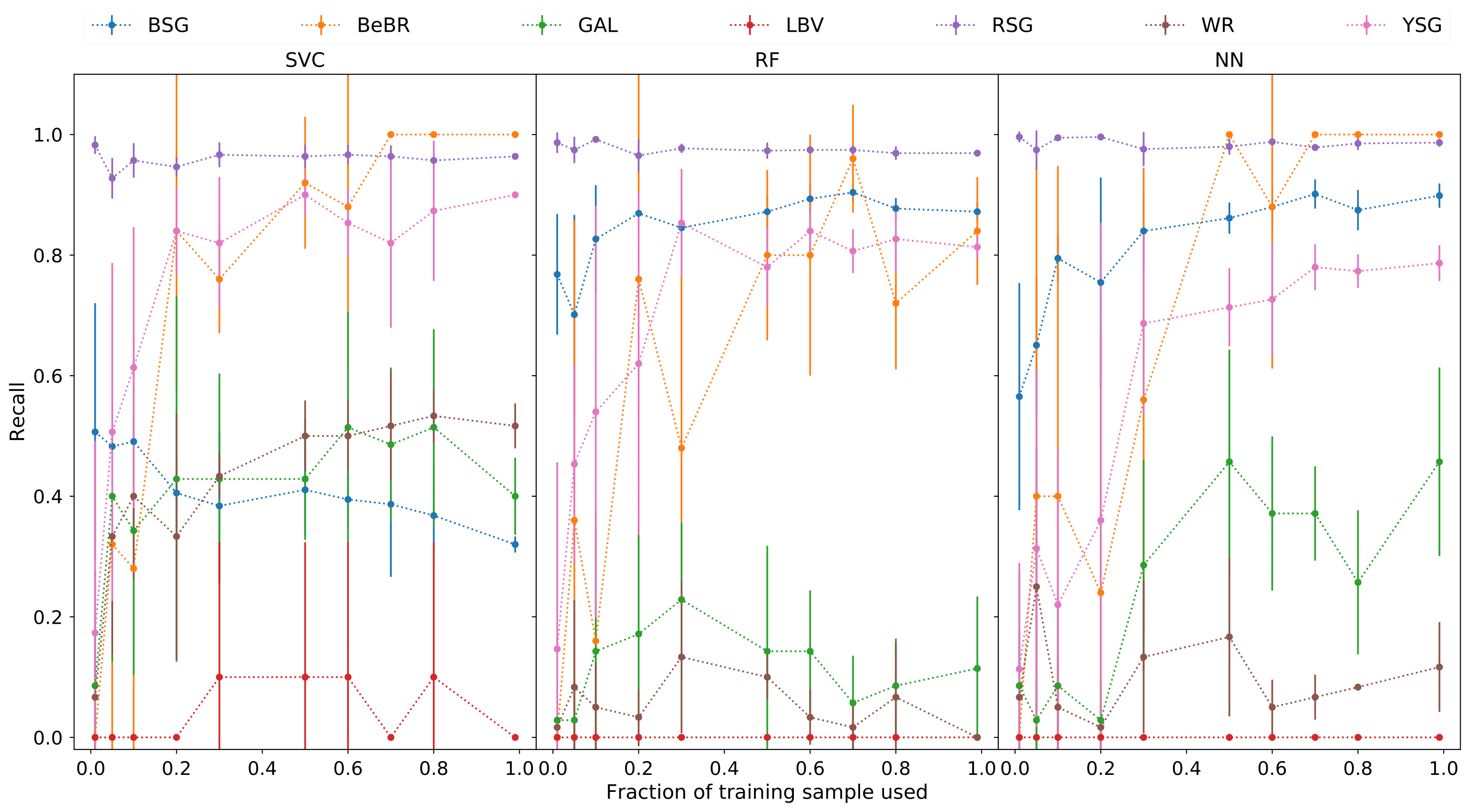

We used the scikit-learn package to implement our algorithms of choice: Support Vector Classifier, Random Forest, and Multi-layer Perceptron (fully connected Neural Network).
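A minimal sketch of how these three classifiers could be configured and tuned with scikit-learn follows. The hyper-parameter grids, the 5-fold `StratifiedKFold`, the `balanced_accuracy` scoring, and the helper name `tune_all` are assumptions for illustration, not the settings used in this work. `class_weight='balanced'` is passed only to `SVC` and `RandomForestClassifier`, because scikit-learn's `MLPClassifier` has no such parameter; all three accept multi-class targets directly (`SVC` internally uses one-vs-one).

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC

# Hypothetical grids: the values actually searched are not reported here
MODELS = {
    "SVC": (SVC(class_weight="balanced"),
            {"C": [1, 10], "gamma": ["scale", 0.1]}),
    "RF": (RandomForestClassifier(class_weight="balanced", random_state=0),
           {"n_estimators": [100, 200], "max_depth": [None, 10]}),
    # MLPClassifier does not accept class_weight
    "MLP": (MLPClassifier(max_iter=2000, random_state=0),
            {"hidden_layer_sizes": [(16,), (32, 16)]}),
}


def tune_all(X, y, n_splits=5):
    """Grid-search each model with stratified k-fold CV; return best params and score."""
    # Stratification keeps every class represented in each fold despite the imbalance
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=0)
    results = {}
    for name, (estimator, grid) in MODELS.items():
        # GridSearchCV clones the estimator, so the MODELS entries are left untouched
        search = GridSearchCV(estimator, grid, cv=cv, scoring="balanced_accuracy")
        search.fit(X, y)
        results[name] = (search.best_params_, search.best_score_)
    return results
```

Here `X` would be the (N, 5) array of colors and `y` the broad class labels; balanced accuracy is one reasonable choice of score for imbalanced classes.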

We handled the class imbalance of our sample with the class_weight='balanced' option, which scikit-learn provides for the Support Vector Classifier and the Random Forest (its MLPClassifier has no such option). We also took into account the multi-class nature of our problem by configuring each classifier accordingly. We performed k-fold cross-validation to search for the optimal hyper-parameters. For the NN we experimented with different combinations of layers and nodes: